Applications are usually designed with a focus on fast performance and low latency. The choices we make in infrastructure, software, networking, and the maintenance of the respective operations also have an impact on our environment and can increase carbon emissions.

Sustainable Software Engineering considers climate science alongside software design and architecture, hardware, electricity, and data center design. Some of the principles of Sustainable Software Engineering, explained at https://docs.microsoft.com/en-us/learn/modules/sustainable-software-engineering-overview/2-overview, can be adopted to build systems in a more carbon-efficient way.

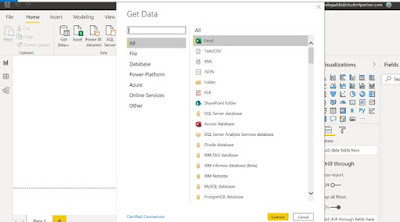

This blog focuses on migrating on-prem servers to the (Microsoft Azure) cloud, which presents an opportunity to reduce energy consumption and the related carbon emissions. Research has demonstrated that the Microsoft Cloud, which offers a serverless architecture, is more energy efficient than traditional enterprise datacenters.

Cloud servers are used only when they are needed, and they are released once execution completes. This helps sustainability because servers, storage, and network bandwidth are run close to their maximum utilization levels.

Measuring how much power a server or application consumes is the first step toward reducing it. The Microsoft Sustainability Calculator is available to Azure enterprise customers and provides insight into the carbon emissions data associated with their Azure services. The calculator gives a granular view of the estimated emissions savings from running workloads on Azure compared with a typical on-premises deployment.

With Microsoft's Azure Kubernetes Service (AKS) offering, the process of developing a serverless application and deploying it with a Continuous Integration / Continuous Delivery (CI/CD) experience, along with security, is simplified. AKS reduces the complexity and operational overhead of managing Kubernetes. Because it is a hosted Kubernetes service, Azure handles health monitoring and maintenance. As the Kubernetes masters are managed by Azure, only the agent nodes need to be maintained. An overview and the key components of AKS can be found here: https://docs.microsoft.com/en-us/azure/aks/intro-kubernetes

Azure Monitor provides details on the cluster's memory and CPU usage, which helps to identify where the carbon footprint of the cluster can be reduced.

Reducing the amount of idle time for compute resources can lower the carbon footprint. Reducing idle time involves increasing the utilization of compute resources, that is, the nodes in the cluster. Similarly, sizing the nodes according to workload needs can help run fewer nodes at higher utilization. Other considerations include configuring how the cluster scales and using spot pools, which make use of idle capacity within Azure.
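To make the utilization argument concrete, here is a minimal sketch of the arithmetic behind right-sizing. The function names, the 80% target, and the workload figures are assumptions chosen for illustration; they are not AKS settings or measured values.

```python
import math


def nodes_needed(total_cpu_cores: float, cores_per_node: float,
                 target_utilization: float) -> int:
    """Smallest node count that keeps average CPU utilization at or below the target."""
    if not 0 < target_utilization <= 1:
        raise ValueError("target_utilization must be in (0, 1]")
    if cores_per_node <= 0:
        raise ValueError("cores_per_node must be positive")
    usable_cores = cores_per_node * target_utilization
    return max(1, math.ceil(total_cpu_cores / usable_cores))


def average_utilization(total_cpu_cores: float, cores_per_node: float,
                        node_count: int) -> float:
    """Average CPU utilization across the cluster for a given node count."""
    return total_cpu_cores / (cores_per_node * node_count)


# Hypothetical workload: 10 cores of demand on 4-core nodes.
right_sized = nodes_needed(10, 4, 0.8)            # 4 nodes
print(average_utilization(10, 4, right_sized))    # 0.625
print(average_utilization(10, 4, 8))              # 0.3125 with 8 over-provisioned nodes
```

In this made-up case, halving the node count doubles the average utilization, which is the effect the paragraph above describes.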

Another aspect is reducing network travel. Consider creating clusters in regions closer to the source of the network traffic. Azure Traffic Manager can also be used to route traffic to the closest cluster.
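One way to reason about "closer to the source" is to weight the latency from each traffic source by its share of requests and pick the region with the lowest result. The sketch below assumes you already have such measurements. The region names, source names, and numbers are hypothetical, and this is not how Azure Traffic Manager itself makes routing decisions.

```python
from typing import Mapping


def best_region(latency_ms: Mapping[str, Mapping[str, float]],
                traffic_share: Mapping[str, float]) -> str:
    """Return the region with the lowest traffic-weighted latency.

    latency_ms[region][source] is the measured latency from a traffic
    source to a candidate region; traffic_share[source] is that source's
    fraction of total requests.
    """
    def weighted_latency(region: str) -> float:
        return sum(share * latency_ms[region][source]
                   for source, share in traffic_share.items())

    return min(latency_ms, key=weighted_latency)


# Hypothetical measurements, for illustration only.
latency = {
    "region-a": {"europe": 20, "asia": 180},
    "region-b": {"europe": 170, "asia": 30},
}
traffic = {"europe": 0.7, "asia": 0.3}
print(best_region(latency, traffic))  # region-a
```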

Spot tools can be configured to change the time or region in which a service runs, based on the shaping of the demand.
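The time-shifting half of this idea can be sketched as picking the start hour whose window has the lowest total carbon intensity. The forecast values and the function name below are hypothetical; a real setup would need an actual carbon-intensity forecast for the region in question.

```python
from typing import Sequence


def greenest_start(forecast: Sequence[float], duration_hours: int) -> int:
    """Index of the start hour that minimizes total carbon intensity
    over a contiguous window of duration_hours."""
    if duration_hours <= 0 or duration_hours > len(forecast):
        raise ValueError("duration_hours must fit inside the forecast")

    # Sliding-window sum: add the hour entering the window, drop the one leaving.
    window = sum(forecast[:duration_hours])
    best_start, best_sum = 0, window
    for start in range(1, len(forecast) - duration_hours + 1):
        window += forecast[start + duration_hours - 1] - forecast[start - 1]
        if window < best_sum:
            best_start, best_sum = start, window
    return best_start


# Hypothetical hourly forecast in gCO2eq/kWh, for illustration only.
hourly_forecast = [400, 350, 200, 150, 300, 450]
print(greenest_start(hourly_forecast, 2))  # 2 (hours 2 and 3 are the cleanest pair)
```

The same comparison could be repeated per region to cover the "or region" part, given a forecast for each candidate region.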

So let's build energy-efficient applications and move away from on-prem wherever feasible to reach the goal of becoming carbon negative!